In the fast-evolving world of artificial intelligence (AI), the demand for faster, more efficient, and scalable computing power has never been greater. Nvidia, a global leader in GPU (Graphics Processing Unit) technology, has once again raised the bar with its latest GPU designed specifically for AI data centers. This cutting-edge innovation is not just a step forward—it’s a leap into the future of AI-driven computing. In this article, we’ll explore Nvidia’s newest GPU, its groundbreaking features, and how it is poised to transform AI data centers across the USA.

The AI Boom and the Need for Advanced GPUs

Artificial intelligence has become the backbone of modern technology, powering everything from autonomous vehicles and healthcare diagnostics to natural language processing and financial modeling. As AI models grow in size and complexity, the computation required to train and deploy them has skyrocketed. Traditional CPUs (Central Processing Units) are built around a relatively small number of cores optimized for sequential tasks, and they struggle to keep up with these workloads. This is where GPUs come into play: with thousands of cores working in parallel, they are far better suited to the massive matrix calculations at the heart of AI.

Nvidia has long been at the forefront of GPU innovation, and its latest offering is specifically tailored to meet the demands of AI data centers. These data centers, which serve as the nerve centers for AI development and deployment, require unparalleled processing power, energy efficiency, and scalability. Nvidia’s newest GPU delivers on all fronts, making it a game-changer for the AI industry.

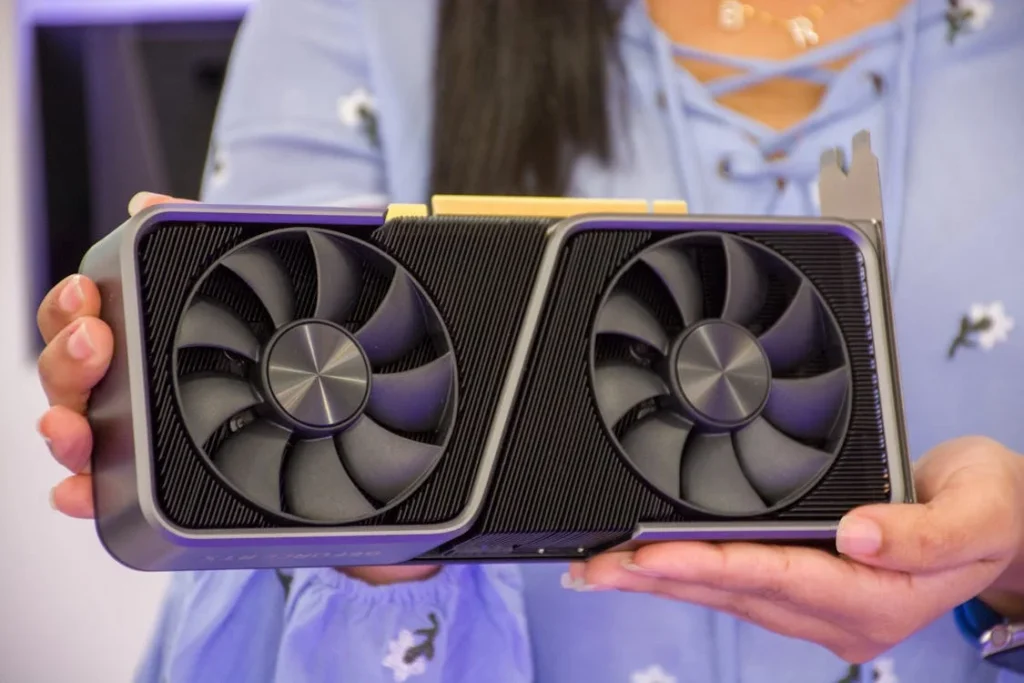

Introducing Nvidia’s Latest GPU: A Visual and Computational Marvel

Nvidia’s latest GPU, part of its Hopper architecture (named after computer science pioneer Grace Hopper), is designed to tackle the most demanding AI workloads. Officially called the H100 Tensor Core GPU, it is a powerhouse of performance, efficiency, and innovation. Let’s dive into its key features:

1. Unprecedented Performance

The H100 GPU is built on TSMC’s 4N process, a custom manufacturing process developed for Nvidia, and packs 80 billion transistors, making it one of the most powerful GPUs Nvidia had built when it launched in 2022. It boasts:

- Up to 18,432 CUDA Cores: The full GH100 chip contains 18,432 CUDA cores, of which the H100 SXM5 module enables 16,896. These cores run thousands of threads in parallel, which suits the highly parallel math of AI workloads.

- Fourth-Generation Tensor Cores: These specialized cores are designed for AI workloads, delivering up to 6x faster chip-to-chip performance than the previous-generation A100, according to Nvidia.

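- FP8 Precision: Support for a new 8-bit floating-point (FP8) data format, which doubles Tensor Core throughput compared with 16-bit formats and halves the memory each value needs, while keeping accuracy close to 16-bit results for many models.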
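To make the FP8 bullet more concrete, here is a minimal Python sketch that decodes the two 8-bit floating-point layouts Hopper supports: E4M3 (4 exponent bits, 3 mantissa bits) and E5M2 (5 exponent bits, 2 mantissa bits). It assumes the bit conventions of the OCP 8-bit floating-point specification, in which E4M3 has no infinities and E5M2 follows IEEE 754 rules. The function `decode_fp8` and the `FP8_FORMATS` table are illustrative names, not part of any Nvidia API.

```python
import math

# (exponent bits, mantissa bits, exponent bias) for the two FP8 layouts
FP8_FORMATS = {"E4M3": (4, 3, 7), "E5M2": (5, 2, 15)}

def decode_fp8(byte: int, fmt: str) -> float:
    """Decode one FP8 byte (0..255) into a Python float."""
    exp_bits, man_bits, bias = FP8_FORMATS[fmt]
    sign = -1.0 if byte & 0x80 else 1.0
    exp = (byte >> man_bits) & ((1 << exp_bits) - 1)
    man = byte & ((1 << man_bits) - 1)
    all_ones_exp = exp == (1 << exp_bits) - 1
    if fmt == "E4M3":
        # E4M3 has no infinities: only the all-ones exponent AND mantissa pattern is NaN,
        # so the top exponent still encodes ordinary numbers (up to 448)
        if all_ones_exp and man == (1 << man_bits) - 1:
            return math.nan
    elif all_ones_exp:
        # E5M2 follows IEEE 754: an all-ones exponent means infinity or NaN
        return sign * math.inf if man == 0 else math.nan
    frac = man / (1 << man_bits)
    if exp == 0:
        return sign * frac * 2.0 ** (1 - bias)        # subnormal: no implicit leading 1
    return sign * (1.0 + frac) * 2.0 ** (exp - bias)  # normal number

# Enumerate all 256 bit patterns to find each format's finite range
for fmt in FP8_FORMATS:
    finite = [v for v in (decode_fp8(b, fmt) for b in range(256)) if math.isfinite(v)]
    print(fmt, "max:", max(finite), "smallest positive:", min(v for v in finite if v > 0))
```

Running it shows the trade-off: E4M3's extra mantissa bit gives finer steps but tops out at 448, while E5M2 reaches 57,344 with coarser steps. One common recipe (the "hybrid" format in Nvidia's Transformer Engine) uses E4M3 for forward-pass tensors and E5M2 for gradients.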

2. Scalability for AI Data Centers

The H100 GPU is designed to scale seamlessly, making it ideal for large-scale AI data centers. It supports:

- NVLink Switch System: Fourth-generation NVLink gives each H100 900 GB/s of GPU-to-GPU bandwidth, more than 7x that of PCIe Gen5, and the NVLink Switch System extends this fabric so that up to 256 H100 GPUs can work together as a single unit.

- DGX H100 Systems: Nvidia’s integrated AI supercomputing platform, which combines eight H100 GPUs into a single system, offering 32 petaflops of FP8 AI performance.
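To put the interconnect and DGX figures in perspective, here is a quick back-of-the-envelope calculation in Python. The constants are assumptions based on Nvidia’s published H100 SXM specifications: 900 GB/s of total NVLink bandwidth per GPU, about 128 GB/s for a PCIe Gen5 x16 link (both counted across both directions), and roughly 3,958 teraflops of FP8 Tensor Core throughput per GPU with structured sparsity. The 10 GB payload is a hypothetical example, and `transfer_seconds` is an illustrative helper.

```python
# Peak figures assumed from Nvidia's published H100 SXM specifications
NVLINK_BIDIR_GBPS = 900.0      # fourth-gen NVLink, total GB/s per GPU (both directions)
PCIE5_X16_BIDIR_GBPS = 128.0   # PCIe Gen5 x16, total GB/s (both directions)
FP8_SPARSE_TFLOPS = 3958.0     # per-GPU FP8 Tensor Core peak, with structured sparsity
GPUS_PER_DGX = 8

def transfer_seconds(payload_gb: float, bidir_gbps: float) -> float:
    """Idealized one-way transfer time: half of the bidirectional bandwidth is
    available in each direction; latency and protocol overhead are ignored."""
    return payload_gb / (bidir_gbps / 2)

ratio = NVLINK_BIDIR_GBPS / PCIE5_X16_BIDIR_GBPS
dgx_pflops = GPUS_PER_DGX * FP8_SPARSE_TFLOPS / 1000.0

print(f"NVLink vs PCIe Gen5 x16 bandwidth: {ratio:.1f}x")      # 7.0x
print(f"DGX H100 peak FP8 (sparse): {dgx_pflops:.1f} PFLOPS")  # 31.7 PFLOPS
for name, bw in [("NVLink", NVLINK_BIDIR_GBPS), ("PCIe Gen5 x16", PCIE5_X16_BIDIR_GBPS)]:
    # hypothetical payload: 10 GB of gradients moved from one GPU to another
    print(f"10 GB over {name}: {transfer_seconds(10.0, bw) * 1000:.0f} ms")
```

Eight GPUs at just under 4 petaflops each is where the roughly 32-petaflop DGX figure comes from. Keep in mind that this number assumes FP8 with structured sparsity, so the dense FP8 peak is about half, and real workloads reach only a fraction of any peak.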

3. Energy Efficiency

AI data centers consume vast amounts of energy, and Nvidia has addressed this challenge head-on. The H100 GPU features:

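- Advanced Cooling Options: Available in air-cooled and liquid-cooled configurations, so data centers can remove heat from GPUs that draw up to 700 W each (SXM version), with liquid cooling helping facilities spend less energy on cooling.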

- Dynamic Power Management: Adjusts clock speeds and power draw to match workload demands, and lets operators cap each GPU’s power to fit a facility’s power budget.

4. AI-Specific Innovations

The H100 GPU is packed with features tailored for AI applications:

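- Transformer Engine: Optimized for transformer-based models (like GPT and BERT), it dynamically chooses between FP8 and 16-bit precision layer by layer. Nvidia reports up to 9x faster training and up to 30x faster inference on the largest language models compared with the A100.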

- Confidential Computing: The H100 is the first GPU to support confidential computing, protecting data and models while they are being processed, a critical feature for industries like healthcare and finance.
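The hard part of using FP8 is that its range is narrow, so values must be scaled to fit before they are rounded. Nvidia’s Transformer Engine documentation describes a "delayed scaling" recipe: keep a short history of each tensor’s absolute maximum (amax), set the scale to the FP8 maximum divided by that amax, and apply it on the next step. The Python sketch below simulates that idea on plain floats. It is not the library’s API: `simulate_e4m3` and `DelayedScaler` are hypothetical names, the activation values are made up, and the real library performs these steps on the GPU.

```python
import math
from collections import deque

E4M3_MAX = 448.0  # largest finite value in the E4M3 FP8 format

def simulate_e4m3(x: float) -> float:
    """Round x to the nearest E4M3 value (ties to even), saturating at +/-448."""
    if x == 0.0 or math.isnan(x):
        return x
    if math.isinf(x):
        return math.copysign(E4M3_MAX, x)
    _, e = math.frexp(x)           # x = m * 2**e with 0.5 <= |m| < 1
    exponent = max(e - 1, -6)      # below 2**-6, E4M3 uses fixed subnormal spacing
    step = 2.0 ** (exponent - 3)   # 3 mantissa bits -> 8 steps per power of two
    q = round(x / step) * step
    return max(-E4M3_MAX, min(E4M3_MAX, q))

class DelayedScaler:
    """Hypothetical helper: per-tensor scale derived from a history of past amax values."""

    def __init__(self, history_len: int = 16, margin: int = 0):
        self.amax_history = deque(maxlen=history_len)
        self.margin = margin   # extra headroom, in powers of two
        self.scale = 1.0       # no history yet, so the first step is unscaled

    def quantize(self, values):
        """Quantize with the scale from previous steps, then update it for the next step."""
        scale_used = self.scale
        q = [simulate_e4m3(v * scale_used) for v in values]
        self.amax_history.append(max(abs(v) for v in values))
        amax = max(self.amax_history)
        if amax > 0:
            self.scale = E4M3_MAX / amax / 2 ** self.margin
        return q, scale_used

activations = [0.0031, -0.0007, 0.0123, 0.0089]   # hypothetical small activations
scaler = DelayedScaler()
scaler.quantize(activations)          # step 1: records amax, output is unscaled
q, s = scaler.quantize(activations)   # step 2: uses the scale learned in step 1
recovered = [v / s for v in q]        # dequantize back to the original range
naive = [simulate_e4m3(v) for v in activations]  # FP8 without any scaling
print("scaled:  ", [f"{v:.5f}" for v in recovered])
print("unscaled:", [f"{v:.5f}" for v in naive])
```

Without scaling, these small values land in FP8’s coarse subnormal range: -0.0007 rounds to zero and 0.0031 becomes about 0.0039. With the amax-based scale, every value comes back within a few percent of the original.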

Why This GPU Matters for AI Data Centers in the USA

The USA is home to some of the world’s largest and most advanced AI data centers, operated by tech giants like Google, Amazon, Microsoft, and Meta. These data centers are the backbone of AI innovation, supporting everything from cloud computing to machine learning research. Nvidia’s latest GPU is a perfect fit for these facilities, offering:

1. Accelerated AI Development

With its unmatched performance, the H100 GPU enables researchers and developers to train AI models faster than ever before. This acceleration is crucial for staying competitive in the global AI race, where speed and efficiency are key.

2. Cost Efficiency

By reducing the time and energy required for AI training, the H100 GPU helps data centers lower operational costs. This is particularly important in the USA, where energy prices and infrastructure expenses can be high.
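The cost argument rests on energy-to-solution: a faster GPU can draw more power yet use less energy per job, because energy is power multiplied by time. The Python sketch below works through that arithmetic. Every number in it (board power, run time, electricity price) is a hypothetical placeholder chosen for illustration, not a measured H100 or A100 result, and `TrainingRun` is an illustrative name.

```python
from dataclasses import dataclass

@dataclass
class TrainingRun:
    name: str
    gpus: int
    watts_per_gpu: float   # average board power during the run (hypothetical)
    hours: float           # wall-clock time to finish the same job (hypothetical)

    def energy_kwh(self) -> float:
        # energy = power x time, summed over all GPUs
        # (excludes CPUs, networking, and facility cooling overhead)
        return self.gpus * self.watts_per_gpu * self.hours / 1000.0

PRICE_PER_KWH = 0.10  # hypothetical electricity price in USD

older = TrainingRun("older GPU", gpus=8, watts_per_gpu=400.0, hours=30.0)
newer = TrainingRun("newer GPU", gpus=8, watts_per_gpu=700.0, hours=10.0)

for run in (older, newer):
    kwh = run.energy_kwh()
    print(f"{run.name}: {kwh:.0f} kWh, ${kwh * PRICE_PER_KWH:.2f}")
```

In this made-up scenario the newer GPU draws 75% more power but finishes three times sooner, so it uses 56 kWh instead of 96 kWh. To approximate a whole facility, you could multiply by its power usage effectiveness (PUE) to account for cooling and other overhead.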

3. Support for Next-Gen AI Models

As AI models become more complex, traditional hardware struggles to keep up. The H100 GPU is designed to handle the latest advancements in AI, including large language models, generative AI, and real-time analytics.

4. Sustainability

With its focus on energy efficiency, the H100 GPU aligns with the growing demand for sustainable computing solutions. This is especially relevant in the USA, where companies are increasingly prioritizing environmental responsibility.

Real-World Applications of Nvidia’s Latest GPU

The H100 GPU is already making waves across various industries in the USA. Here are a few examples of how it’s being used:

1. Healthcare

AI-powered diagnostics and drug discovery require immense computational power. The H100 GPU enables researchers to analyze vast datasets and develop life-saving treatments faster than ever before.

2. Autonomous Vehicles

Training self-driving car algorithms involves processing massive amounts of sensor data. The H100 GPU accelerates this process, bringing us closer to a future of safe and efficient autonomous transportation.

3. Financial Services

From fraud detection to algorithmic trading, the financial industry relies heavily on AI. The H100 GPU provides the speed and accuracy needed to handle complex financial models in real time.

4. Natural Language Processing

AI developers such as OpenAI and Meta use H100 clusters to train advanced language models, and cloud providers like Google Cloud and Microsoft Azure rent out H100 capacity, enabling breakthroughs in chatbots, translation, and content generation.

Challenges and Considerations

While Nvidia’s latest GPU is a technological marvel, it’s not without challenges:

- High Cost: The H100 GPU is a premium product, and its price tag may be prohibitive for smaller organizations.

- Infrastructure Requirements: To fully leverage its capabilities, data centers may need to upgrade their infrastructure, including cooling systems and power supplies.

- Supply Chain Constraints: Like many advanced technologies, the H100 depends on a complex global supply chain, and demand has at times outstripped supply, leading to long lead times for new orders.

The Future of AI Data Centers with Nvidia

Nvidia’s latest GPU is more than just a piece of hardware—it’s a catalyst for innovation. As AI continues to reshape industries, the H100 GPU will play a pivotal role in driving progress. For AI data centers in the USA, this means faster development cycles, lower costs, and the ability to tackle increasingly complex challenges.

Looking ahead, Nvidia is likely to continue pushing the boundaries of GPU technology, with a focus on sustainability, scalability, and performance. As the AI revolution unfolds, one thing is clear: Nvidia’s latest GPU is at the heart of it all, powering the future of computing in the USA and beyond.

Conclusion: A New Era for AI Computing

Nvidia’s latest GPU is a testament to the company’s commitment to innovation and excellence. With its unparalleled performance, energy efficiency, and AI-specific features, the H100 GPU is set to revolutionize AI data centers across the USA. Whether you’re a researcher, developer, or business leader, this GPU represents a new era of possibilities for AI-driven computing.

As the USA continues to lead the world in AI innovation, Nvidia’s latest GPU ensures that the country remains at the forefront of this transformative technology. The future of AI is here, and it’s powered by Nvidia.